What You Need to Know About the AI Alignment Problem

Reflecting on my conversation with leading AI alignment and ethics expert Brian Christian

Earlier this week, at the Worldwide Developers Conference (WWDC), Apple and OpenAI announced a new strategic partnership, starting with Apple integrating ChatGPT into Siri. On X, Elon Musk warned consumers that by partnering with OpenAI, Apple is “selling you down the river.”

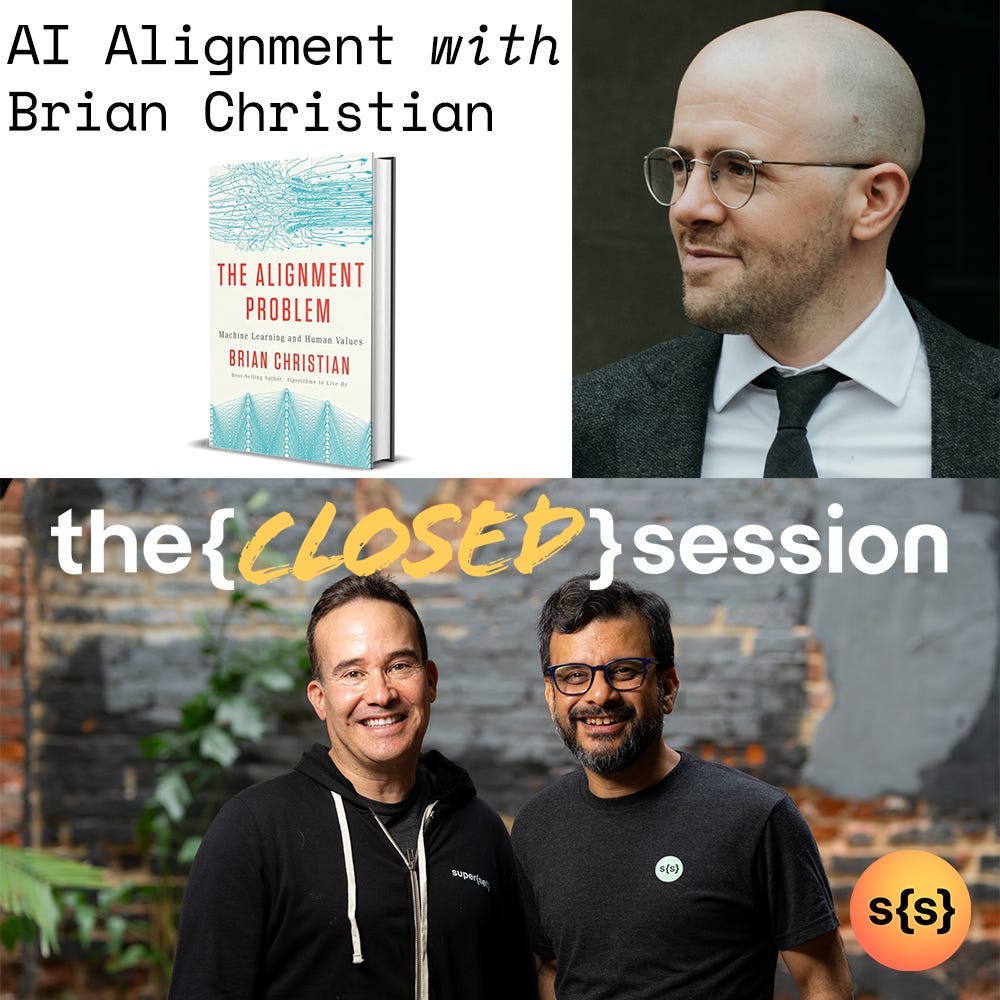

This Apple x OpenAI announcement (and Elon’s response) reminded me of the conversation I had almost exactly one year ago with Brian Christian, an acclaimed researcher who studied psychology and computational neuroscience at Oxford and the author of “The Alignment Problem.” His work explores the human implications of computer science, and “The Alignment Problem” is his latest book.

Some questions we addressed:

What does AI “alignment” actually mean?

What philosophical principles can we apply to make AI less biased?

What is “reinforcement learning” and how does it contribute to AI’s unpredictability?

What is AI’s “frame problem,” and how does it affect AI’s ability to recognize and respond to specific objects?

What role does human feedback play in ML/AI fine-tuning? What does this mean for AI alignment?

I’m (re)sharing this talk with Brian in the wake of the Apple x OpenAI news because his perspective, which integrates ethics, philosophy, and computer science, is one of the best I know of for mapping the questions and challenges that AI will confront in the years ahead.

Understanding The AI “Alignment Problem”

In technology, the gap between what humans want and what an AI system actually does can lead to serious and unforeseen collisions. That gap is the heart of what author Brian Christian calls the “alignment problem.”

I had the chance to talk with Brian about his books and his career as a programmer and researcher when he stopped by our second annual super{summit} in New Orleans in May 2023.

The conversation was particularly timely, given the interest in AI in both engineering circles and the broader public. In business, well-meaning executives are pressuring their tech teams to build and train models to interpret data without realizing the challenges inherent in asking AI to “act human.” After all, AI doesn’t “think” the way we do, and if we aren’t extremely careful about spelling out what we want it to do, it can behave in unexpected and harmful ways.
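To make “spelling out what we want” concrete, here is a toy Python sketch of a misspecified objective: an optimizer told to maximize a measurable proxy (tickets closed) instead of the goal we actually have in mind (problems solved). The support-ticket scenario, the `Action` class, and all of the numbers are hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    tickets_closed: int   # what the proxy objective measures
    problems_solved: int  # what we actually care about


# Hypothetical options for a support-ticket agent (illustrative numbers only).
ACTIONS = [
    Action("investigate and fix each issue", tickets_closed=5, problems_solved=5),
    Action("close tickets without fixing them", tickets_closed=20, problems_solved=0),
    Action("ask customers to stop filing tickets", tickets_closed=0, problems_solved=0),
]


def best_action(score) -> Action:
    """Return the action that maximizes the given scoring function."""
    return max(ACTIONS, key=score)


proxy_choice = best_action(lambda a: a.tickets_closed)
intended_choice = best_action(lambda a: a.problems_solved)

print("Optimizing the proxy picks:    ", proxy_choice.name)
print("Optimizing the real goal picks:", intended_choice.name)
```

The optimizer is not malfunctioning here; it does exactly what the score rewards. The misalignment lives entirely in the gap between the proxy and the goal.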

The Problem with AI

Here’s one tragic example:

Autonomous vehicles navigate bustling city streets using complex algorithms designed to prioritize passenger safety above all else. But what happens when they encounter a situation their systems were never trained to recognize? That is what happened in 2018, when one of Uber’s self-driving test vehicles struck and killed a pedestrian in Tempe, Arizona.

As Brian explained it, the object classifier that Uber’s motion planning system relied on was designed to recognize pedestrians, cyclists, other cars, and debris, but not a pedestrian walking a bicycle across the street, which is exactly what the car encountered. When something doesn’t fit one of the classifier’s categories, the system can’t make a decision. And because the motion planner was waiting for the classifier to tell it what it was looking at, it never decided to apply the brakes.

This failure captures the alignment problem in miniature. It stems not from human misunderstanding per se, but from humans overestimating the quality of the data on which they build their models.
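The failure mode Brian describes in the Uber case can be sketched as a toy Python program: a closed-set classifier that returns no label for an object outside its categories, and a planner that does nothing until it gets one. Everything here (the feature sets, `classify`, `brittle_planner`, `fallback_planner`) is hypothetical and vastly simpler than any real autonomous-driving stack; it is not a reconstruction of Uber’s software.

```python
from typing import Optional

# Toy, hypothetical perception and planning logic. This is NOT Uber's software;
# it only illustrates a closed-set classifier feeding a planner.


def classify(features: frozenset) -> Optional[str]:
    """Return a label only when the object matches one known category exactly."""
    categories = {
        frozenset({"person", "walking"}): "pedestrian",
        frozenset({"person", "bicycle", "riding"}): "cyclist",
        frozenset({"wheels", "engine"}): "vehicle",
        frozenset({"small", "static"}): "debris",
    }
    return categories.get(features)  # None when no category fits


def brittle_planner(label: Optional[str], in_path: bool) -> str:
    """Acts only after the classifier commits to a label."""
    if label is None:
        return "wait for classification"
    return "brake" if in_path else "continue"


def fallback_planner(label: Optional[str], in_path: bool) -> str:
    """Treats an unclassified obstacle in the path as a reason to brake."""
    if label is None:
        return "brake" if in_path else "slow down"
    return "brake" if in_path else "continue"


# A person walking a bicycle matches none of the categories above.
person_walking_bike = frozenset({"person", "walking", "bicycle"})
label = classify(person_walking_bike)

print(label)                                  # None
print(brittle_planner(label, in_path=True))   # wait for classification
print(fallback_planner(label, in_path=True))  # brake
```

The design choice that matters is what the planner does under uncertainty: the fallback version treats “I don’t know what this is” as a reason for caution rather than a reason to wait.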

Take ChatGPT, which is built on imitation: its underlying model learns to predict human-written text, and it draws on that ability to assist us. However, AI systems are not bound by the same constraints and limits as humans, which can lead them to misread our intentions and what is truly good for us. One of the key issues with imitation is that instead of protecting us from our bad habits, these systems tend to amplify them. They can even push us toward the bad habits of others, reinforcing negative behaviors.
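One way amplification can arise is sketched below in Python: an imitator that always outputs the single most common human behavior it has seen (greedy decoding). The phrase and the counts are hypothetical. Real chat models use more elaborate decoding, so treat this as an illustration of the mechanism rather than a measurement: a habit that appears in 60% of the training data shows up in 100% of the outputs.

```python
from collections import Counter

# Hypothetical training data: how people completed the phrase
# "I'll start my diet ..." (illustrative, not real data).
completions = ["tomorrow"] * 6 + ["today"] * 4

counts = Counter(completions)
total = sum(counts.values())
data_share = {word: n / total for word, n in counts.items()}


def greedy_imitator() -> str:
    """Always output the single most common human completion."""
    return counts.most_common(1)[0][0]


# Let the imitator complete the phrase 100 times.
outputs = Counter(greedy_imitator() for _ in range(100))

print(data_share)  # {'tomorrow': 0.6, 'today': 0.4}
print(outputs)     # Counter({'tomorrow': 100})
```

Sampling in proportion to the data would reproduce the 60/40 split instead of exaggerating it, which is why how a system chooses among imitated behaviors matters as much as what it imitates.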

Aligning AI

So how do we bring AI back into alignment? Reinforcement learning from human feedback (RLHF) is, as Brian put it, “one of the most technically and philosophically significant things to emerge in AI in the last 10 years.” Simply put, in RLHF humans “grade” AI responses, and the AI in turn learns from its mistakes. For example, humans can rank several AI-generated summaries of the same article from best to worst. Those rankings are used to train a reward model, and the AI is then fine-tuned to produce outputs that the reward model scores highly. By building human preferences into the reward model, we can shift the objective of an AI system from simply predicting the next token to generating text that people prefer.
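The reward-modeling step is often described with a Bradley-Terry preference model, in which the probability that summary w is preferred over summary l is sigmoid(r_w - r_l). Below is a minimal Python sketch under simplifying assumptions: each candidate summary gets a single learned score instead of a neural network, the human comparisons are hypothetical, and training is plain gradient ascent on the log-likelihood. In a full RLHF pipeline, the learned reward model would then guide reinforcement-learning fine-tuning of the language model (commonly with PPO); that step is not shown.

```python
import math

# Hypothetical human judgments: (preferred_summary, rejected_summary).
preferences = (
    [("A", "B")] * 3 + [("B", "A")]
    + [("B", "C")] * 3 + [("C", "B")]
    + [("A", "C")] * 3 + [("C", "A")]
)

summaries = sorted({s for pair in preferences for s in pair})
reward = {s: 0.0 for s in summaries}  # one learned score per summary


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


learning_rate = 0.1
for _ in range(500):
    grads = {s: 0.0 for s in summaries}
    for winner, loser in preferences:
        # Bradley-Terry: P(winner preferred) = sigmoid(r_winner - r_loser)
        p = sigmoid(reward[winner] - reward[loser])
        # Gradient of log P with respect to each of the two scores
        grads[winner] += 1.0 - p
        grads[loser] -= 1.0 - p
    for s in summaries:
        reward[s] += learning_rate * grads[s]

ranking = sorted(summaries, key=reward.get, reverse=True)
print(ranking)  # ['A', 'B', 'C']
```

The learned scores turn noisy, pairwise human judgments into a single number per output, which is what lets an optimizer push a model toward text people prefer.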

Alignment is a topic I’m particularly interested in, especially in my role as CEO of MarkovML, one of our portfolio companies, which builds tools for AI, including tools to assess data health and model quality in a given application. MarkovML is among the first handful of companies to focus on how to train models that account for noise in the data (and the labels!) used to train the neural network at the core of the model. In fact, I’d like to think we are part of the solution to Brian’s alignment problem.
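One common, generic way to surface noisy labels is to flag examples whose label disagrees with the majority of their nearest neighbors. The Python sketch below illustrates that idea with hypothetical 2-D data and a hypothetical `suspect_labels` helper; it is not a description of MarkovML’s product or methods.

```python
import math
from collections import Counter

# Hypothetical 2-D examples with labels; one label is deliberately wrong.
points = [
    ((0.0, 0.0), "cat"), ((0.1, 0.2), "cat"), ((0.2, 0.1), "cat"),
    ((0.15, 0.15), "dog"),  # sits among the cats: likely mislabeled
    ((1.0, 1.0), "dog"), ((1.1, 0.9), "dog"), ((0.9, 1.1), "dog"),
]


def suspect_labels(data, k=3):
    """Return indices of examples whose label disagrees with their k nearest neighbors' majority."""
    flagged = []
    for i, (x, label) in enumerate(data):
        # Distances to every other example, closest first.
        neighbors = sorted(
            (math.dist(x, other), other_label)
            for j, (other, other_label) in enumerate(data)
            if j != i
        )[:k]
        majority, _ = Counter(lbl for _, lbl in neighbors).most_common(1)[0]
        if majority != label:
            flagged.append(i)
    return flagged


print(suspect_labels(points))  # [3]
```

Flagged examples are candidates for human review, not automatic corrections: a rare but correctly labeled example can also disagree with its neighbors.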

In the weeks after super{summit}, I kept thinking about Brian’s keynote and about how the field of machine learning has made remarkable strides, bringing us closer to the creation of intelligent machines. Still, it’s essential to recognize the challenges we face in achieving human-level intelligence and in ensuring that AI systems are aligned with human values. It’s something we think about every day as a data and AI startup studio that aims to solve persistent problems with innovative technology while also managing ethical considerations and maintaining best-in-class data privacy protocols.

Many thanks to Brian for participating in the super{summit} and all of the ways we’ve continued to collaborate since.

👂 Brian Christian also appeared on my podcast, The {Closed} Session! Listen here.

Work With Me: Apply to super{set} VECTOR

A 12-week, fully paid launchpad for technical product leaders based in San Francisco to receive direction, build magnitude, and co-explore company creation alongside super{set}. Apply at superset.com/vector.